I still contribute to the civic-tech community Česko.Digital, where we recently created a new project. We store all our static assets in an S3 bucket served through CloudFront. This article describes how we simplified uploading these assets.

Main Motivation

Česko.Digital needs storage for public assets for several reasons:

- We don't store blog images in the repository, to keep the Git history small.

- We publish generated data, for example, the book translation or a machine-readable list of all cities in Czechia.

The first solution was simple. Tomáš Znamenáček (Zoul) and Martin Wenisch configured an S3 bucket with CloudFront serving the domain data.cesko.digital. All scripts for automated data uploads wrote to this bucket. Zoul was responsible for uploads because he usually publishes all new blog posts.

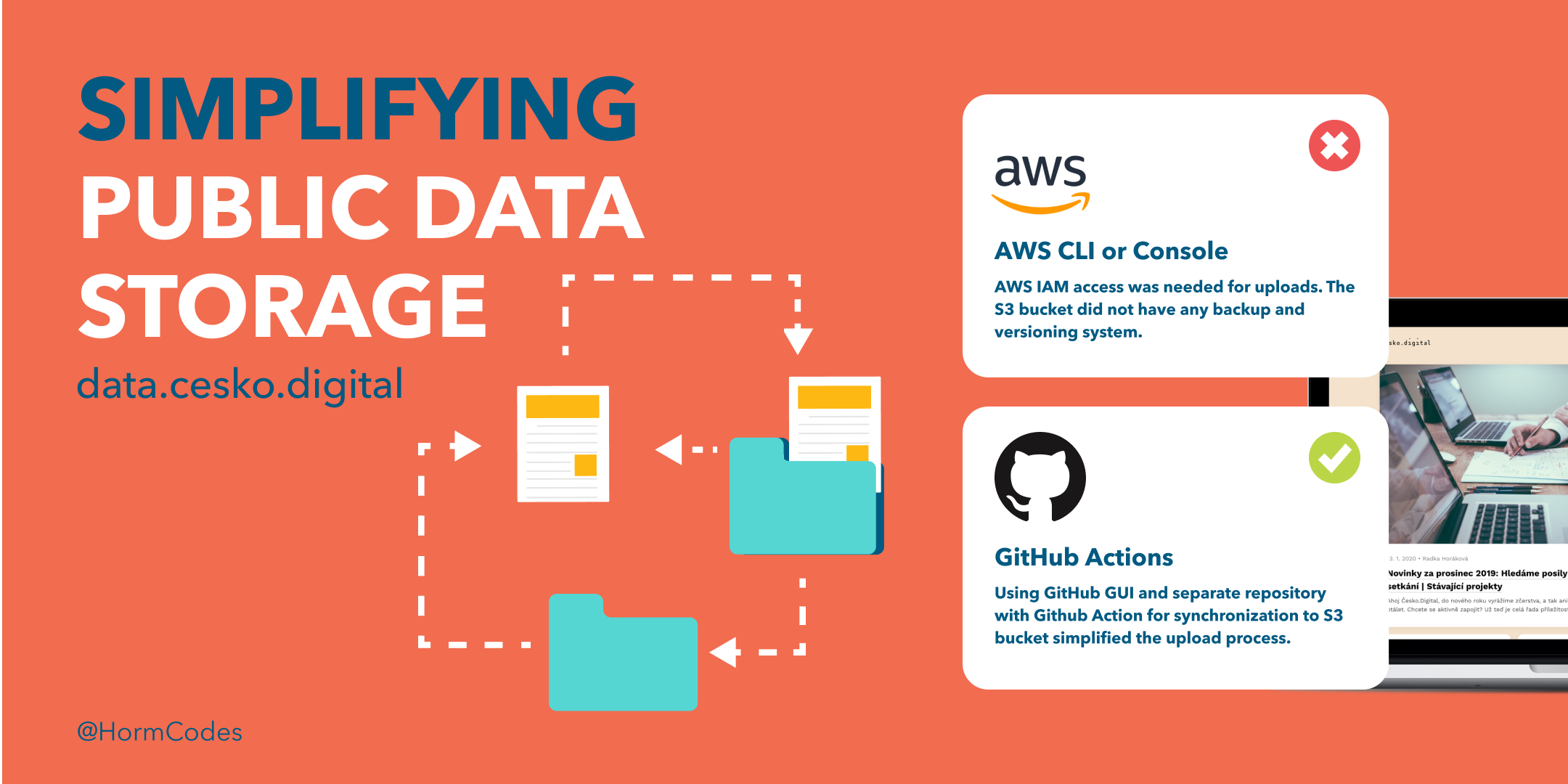

It worked well, but there was room for improvement:

- Only people with AWS IAM access could upload new files.

- Uploads were possible only through the AWS CLI or the AWS Console.

- The S3 bucket had no backup or versioning.

Architecture and Implementation

First, we opened a GitHub issue to discuss and specify the solution. The initial suggestion was to build a simple application, but then Martin had a great idea: use GitHub Actions.

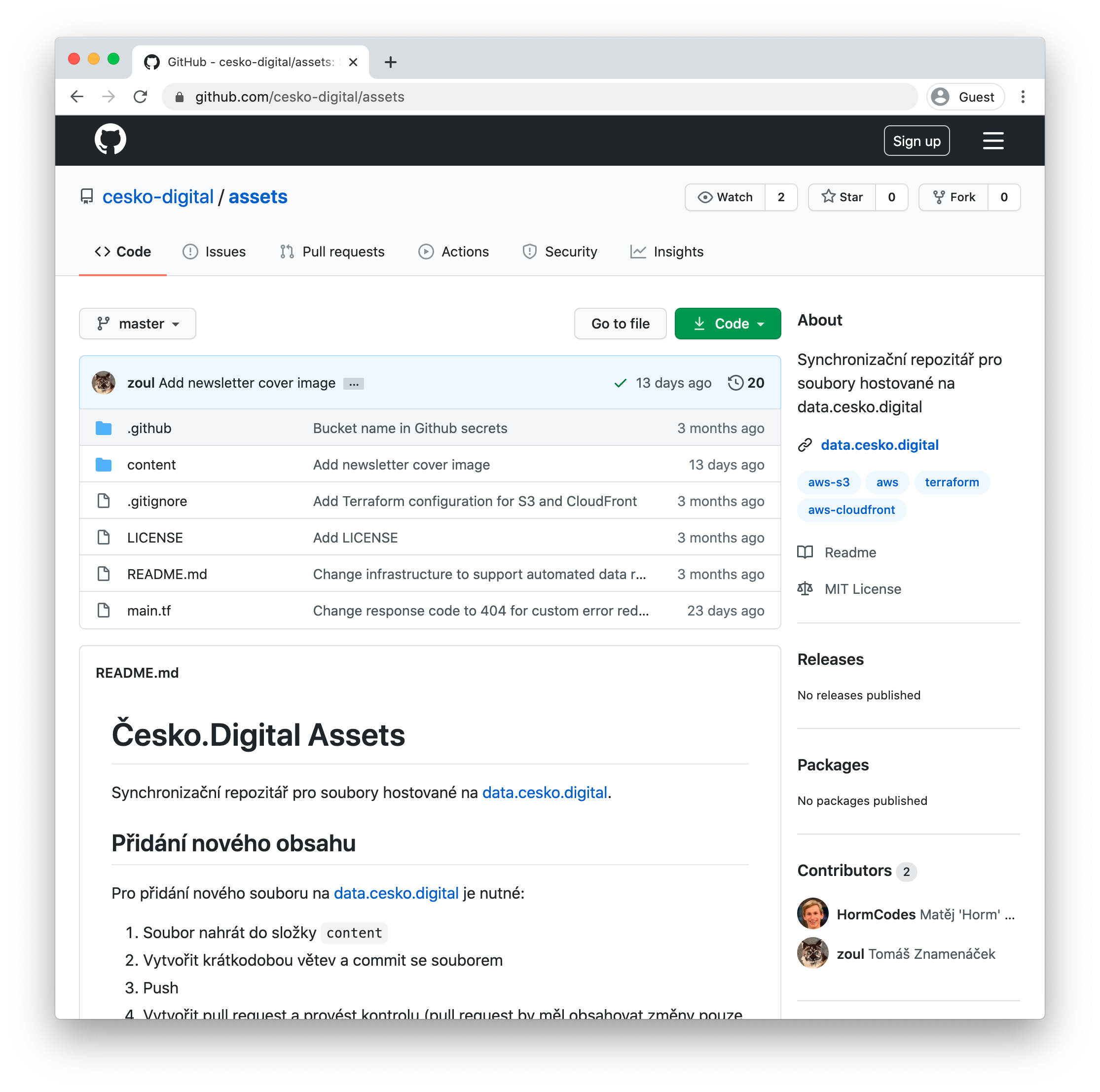

The idea was to keep the assets in a separate GitHub repository and configure a GitHub Action to synchronize its content with the bucket. The advantage of this solution is that Git provides versioning and a backup of the content. I looked for existing solutions to our public data storage needs but did not find any, so I went with this approach and implemented the synchronization myself.
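To make the synchronization idea concrete, here is a minimal Python sketch of what one sync run has to decide: which files to upload and which objects to delete. It is not the code of the action we use; jakejarvis/s3-sync-action wraps `aws s3 sync`, which by default compares file size and modification time rather than content hashes. The `plan_sync`, `digest`, and `SyncPlan` names, and the assumption that we already know a content hash for every object in the bucket, are hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class SyncPlan:
    uploads: list[str]    # keys that are new or changed in the repository
    deletions: list[str]  # keys that exist only in the bucket


def digest(data: bytes) -> str:
    """Content hash used to detect changed files (an assumption of this sketch)."""
    return hashlib.sha256(data).hexdigest()


def plan_sync(local: dict[str, bytes], remote: dict[str, str], delete: bool = False) -> SyncPlan:
    # local: object key -> file content from the repository's content folder
    # remote: object key -> content hash of the object already in the bucket (hypothetical)
    uploads = sorted(key for key, data in local.items() if remote.get(key) != digest(data))
    # Without deletion, files removed from Git would stay in the bucket forever.
    deletions = sorted(set(remote) - set(local)) if delete else []
    return SyncPlan(uploads, deletions)


local = {"blog/cover.png": b"new image", "data/cities.json": b"[]"}
remote = {"data/cities.json": digest(b"[]"), "blog/old.png": digest(b"old")}
print(plan_sync(local, remote, delete=True))
# SyncPlan(uploads=['blog/cover.png'], deletions=['blog/old.png'])
```

The `delete` parameter plays the same role as `aws s3 sync --delete`, which removes objects that exist in the destination but not in the source.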

I knew I wanted to use Terraform to configure the AWS setup. AWS is complex, and I recommend keeping infrastructure as code because it makes prototyping practical: Terraform lets you set up, version, and tear down AWS infrastructure. Martin has experience with Terraform and reviewed my code. For the S3 upload itself, I found an existing solution: jakejarvis/s3-sync-action.

All sources are available on GitHub here, and I would like to highlight a few design decisions:

- We have two S3 buckets: one for manual uploads and one for generated data. CloudFront has an origin group configured with a failover bucket: when a requested file is not in the primary bucket, CloudFront forwards the request to the bucket with generated files.

- We have an error page for invalid URLs.

- The Sync Action uploads all files from the content folder and invalidates the CloudFront cache. We had to add a delete flag so that files removed from the repository are also removed from the bucket.

- We configured a GitHub Action for infrastructure changes and use a separate S3 bucket as the Terraform backend. The repository also contains a CODEOWNERS file, which guards against unwanted infrastructure changes that could bring extra costs.
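The failover behaviour of the origin group, combined with the error page, can be modelled in a few lines of Python. This is a simplified sketch, not CloudFront's implementation: buckets are plain dictionaries, and the `fetch` and `serve` helpers and the set of failover status codes are assumptions for illustration. In a real setup, the status codes that trigger failover are configured on the origin group, and a missing S3 object may surface as 403 rather than 404 when the requester is not allowed to list the bucket.

```python
from typing import Optional

# Assumption: status codes configured on the origin group to trigger failover.
FAILOVER_STATUS_CODES = {403, 404}


def fetch(bucket: dict[str, bytes], key: str) -> tuple[int, Optional[bytes]]:
    """Simulate a GET request against one S3 origin."""
    if key in bucket:
        return 200, bucket[key]
    return 404, None


def serve(
    primary: dict[str, bytes],
    fallback: dict[str, bytes],
    key: str,
    error_page: bytes,
) -> tuple[int, bytes]:
    # Try the bucket with manual uploads first.
    status, body = fetch(primary, key)
    if status in FAILOVER_STATUS_CODES:
        # Fail over to the bucket with generated data.
        status, body = fetch(fallback, key)
    if body is None:
        # Neither origin has the file: respond with the custom error page.
        return status, error_page
    return status, body


manual_uploads = {"blog/cover.png": b"<png bytes>"}
generated_data = {"cities.json": b"[]"}

print(serve(manual_uploads, generated_data, "blog/cover.png", b"Not found"))  # (200, b'<png bytes>')
print(serve(manual_uploads, generated_data, "cities.json", b"Not found"))     # (200, b'[]')
print(serve(manual_uploads, generated_data, "missing.txt", b"Not found"))     # (404, b'Not found')
```

Because the primary bucket is always tried first, a manually uploaded file shadows a generated file with the same key.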

Now everybody can upload a new file through the GitHub web interface, and the upload process is transparent and clear. We may add a layer like NetlifyCMS on top of this architecture to hide the committing process, but it is not a priority at this point. I enjoyed this project: it was fun, and I learned new things about Terraform and AWS. Thank you, Martin and Zoul, for your help and for being part of this project.